InterPlanetary File System

I don’t agree with pretty much any of the video’s content, and I’m not going to link to it here because I don’t want to generate additional traffic for something I think is mostly ill-informed. That said, there were bits, especially the content related to NFTs, where I thought ‘hmmm, I can see how they came to that conclusion’. What I am interested in are the comments about IPFS specifically, because IPFS actually has some really interesting use cases.

IPFS is real!

IPFS is far from a scam. It’s an incredible innovation that could really change how we access web resources, and it is being actively explored by large, established companies, Netflix for example.

A little background

The internet, like most things in computing, seems quite cyclical: what was once the way to do something evolved, and evolved again, and eventually we came back to how we used to do it, but with newer tech and ideas.

So with Web1, especially in the early days (the wild west, if you will), it was not uncommon for people to build their own web pages about whatever content they felt passionate about. Finding content was down to word of mouth or, more likely, a website about model trains would link to other websites about model trains. The early web was more about community than harvesting all of your information to sell you something; communities formed around the content they were interested in.

To help us find all of this great content, people started making internet directories. The directory websites were probably some of the earliest websites to feature advertising, as people paid to get their stuff in front of eyeballs that were looking for something else. The race to make the best directory, to ensure that your directory was where people started, eventually led to the search engines we know today.

This, perhaps unintentionally, conditioned us to look for all information in a single place. For example, we might go to Google for a blanket web search, an image search, a video search, news content, etc. The longer Google can keep people there, the more adverts they can sell to companies that sponsor search terms.

This isn’t a post about search engines…

Web1 was all about the content creator building a site and owning the content.

Web2 was all about centralisation, making it easier for people to host content. Instead of building a platform myself and then posting a blog entry, uploading my images, linking to other posts, etc., I could simply go to a central location and post my content there. They did all the heavy lifting: they built the infrastructure, it all looks the same, it’s tidy, it links to similar content, and it’s usually optimised for SEO, meaning that search engines can find it for us.

There are downsides, many in fact, but the main one in my mind is: who owns the content? Quite often, companies like Facebook own the content that you post. You can download it, you can delete it, but it stays on their servers (or at least as long as they want it, or whatever their retention policy is). You rely on one or a few services. If they change a policy or have a failure, potentially millions of people can be affected; your content might be censored (not always a bad thing) or it may just be unavailable.

Web2 is especially evident in the social media space. We’re used to going to a small collection of websites from which we can access huge swathes of content and opinion.

The drawbacks of centralisation include:

- Relying on a single entity (if they have a tech issue, lots of users are affected)

- Dancing to someone else’s tune (if they no longer agree with your content, it’s gone)

- Ownership is a question mark

- Profiting from your content (your content could be making them very rich, where is your cut?)

- Keyboard warriors can be quite vocal, drawing in others with similar opinions

- How do we know the content was not tampered with?

- Who originally produced the content, who deserves the credit?

On the point of the keyboard warriors, they can attack any space that is open for comment, but the number of them on places like Facebook and Twitter can be really harmful, especially to vulnerable people.

So what is IPFS?

IPFS, the InterPlanetary File System, is a peer-to-peer protocol for storing and sharing content in a secure, resilient and efficient way. Think of it as web hosting for the Web3 era.

One of the biggest benefits of IPFS is being able to prove if the content was modified. I’d say that the second biggest benefit is being able to prove who was the original owner of the content.

Content theft is a common phenomenon in social media, and it is not uncommon for influencers with large followings to take content from less popular creators without permission. A user can protest if they find the copy, but if the content is stolen from one platform and uploaded onto another, it becomes much more difficult for the creator to claim their content. With Web3, it’s easy (or easier) to prove who came up with the content first.

Content Addressing

Currently, finding a resource on the web usually begins with a visit to your favourite search engine. To get to the search engine, you type an address such as www.bing.com. This is a human-readable address; in the background it is converted, using DNS, to a numeric IP address such as 104.86.110.107. This is known as Location Addressing. The way Web2 (and Web1) works is that a DNS server says that a given numeric address is the target host for www.bing.com. The host can return anything: if the host has been hacked, the content returned to the user may not be what they were expecting. It’s also possible for the DNS server to be hacked, pointing requests to different or unintended websites entirely.

Content Addressing takes a different approach, identifying the content through a unique Content ID (CID). The CID is derived from the content itself using cryptographic hashing, which acts as a type of fingerprint for the data. It ensures that what you receive is what was expected.
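To make the fingerprint idea a bit more concrete, here is a minimal Python sketch. It uses a plain SHA-256 hex digest as a stand-in for a CID, which is a simplification on my part: real CIDs wrap the hash in some extra metadata and encoding. The function names `content_id` and `verify` are made up for this example.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Return a SHA-256 hex digest, used here as a simplified stand-in for a CID."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_address: str) -> bool:
    """Check that the bytes we received match the address we asked for."""
    return content_id(data) == expected_address


original = b"A page about model trains"
tampered = b"A page about model trains!"

# The address is derived from the content, not from where it is hosted.
address = content_id(original)

print(verify(original, address))  # the untouched content matches its address
print(verify(tampered, address))  # a single extra byte gives a different hash
```

The point is that the address and the content are tied together: whoever serves the bytes, the person receiving them can re-hash them and check that they match what was asked for.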

Distributed Content

One of the benefits of the Web2 space was that once so much traffic was visiting single web resources, it was considered fruitful to distribute that content with things like CDNs and regional replication. If we consider earlier versions of web hosting, it was not uncommon to host a website at home. That meant that if a website had content that appealed to people in other parts of the world, they might find the latency to be poor.

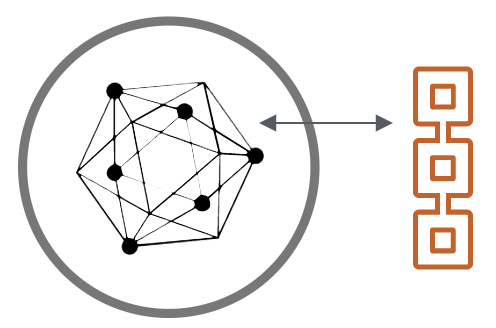

Web3 will continue to offer replicated and distributed hosting, using a Distributed Hash Table (DHT) to find which peers hold the content and then fetching it through a peer-to-peer network. Essentially, the data is sliced up into chunks, allowing each piece to be securely shared. If a peer goes down, the data is still available from other peers in the network that hold a copy. This is quite similar to how things like BitTorrent work.
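As a rough illustration of the "if a peer goes down" point, here is a toy Python sketch. It is not how the real DHT is implemented: a real DHT spreads its records across many peers, whereas this example keeps a single in-memory dictionary (`provider_table`) that maps a content hash to the peers that say they hold it. `Peer`, `announce` and `fetch_from_network` are hypothetical names for this example only.

```python
import hashlib
from typing import Dict, List, Optional


class Peer:
    """A hypothetical peer that stores blocks keyed by their SHA-256 hash."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.online = True
        self.blocks: Dict[str, bytes] = {}

    def store(self, data: bytes) -> str:
        address = hashlib.sha256(data).hexdigest()
        self.blocks[address] = data
        return address

    def fetch(self, address: str) -> Optional[bytes]:
        if not self.online:
            return None  # an offline peer cannot answer
        return self.blocks.get(address)


def announce(provider_table: Dict[str, List[Peer]], address: str, peer: Peer) -> None:
    """Record that a peer holds the content with this address."""
    provider_table.setdefault(address, []).append(peer)


def fetch_from_network(provider_table: Dict[str, List[Peer]], address: str) -> Optional[bytes]:
    """Try each known provider in turn and return the first copy that matches the hash."""
    for peer in provider_table.get(address, []):
        data = peer.fetch(address)
        if data is not None and hashlib.sha256(data).hexdigest() == address:
            return data
    return None


provider_table: Dict[str, List[Peer]] = {}
alice, bob = Peer("alice"), Peer("bob")
address = alice.store(b"model train timetable")
bob.store(b"model train timetable")
announce(provider_table, address, alice)
announce(provider_table, address, bob)

alice.online = False  # one peer goes down
print(fetch_from_network(provider_table, address))  # still served, this time by bob
```

Because the request is for a hash rather than a location, it does not matter which peer answers, as long as the bytes match.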

How does it all work?

When you upload a file to IPFS, it gets split into smaller pieces that can be distributed across hosts in the network. Each piece of the file has a hash and there is a hash for the complete file as well.
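Here is a small Python sketch of that idea, under some loud assumptions: the chunk size is a tiny 4 bytes so the output is easy to follow (real chunks are far larger), and the "hash for the complete file" is simply a hash over the list of chunk hashes, which is my simplification rather than the structure IPFS actually builds. The helper names are invented for this example.

```python
import hashlib
from typing import List, Tuple

CHUNK_SIZE = 4  # assumption: deliberately tiny so the example is readable


def hash_bytes(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> List[bytes]:
    """Cut the data into fixed-size pieces (the last piece may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def describe_file(data: bytes, chunk_size: int = CHUNK_SIZE) -> Tuple[List[str], str]:
    """Return the hash of every chunk plus one root hash covering all of them."""
    chunk_hashes = [hash_bytes(chunk) for chunk in split_into_chunks(data, chunk_size)]
    # Simplified root: a hash over the ordered list of chunk hashes.
    root_hash = hash_bytes("".join(chunk_hashes).encode("ascii"))
    return chunk_hashes, root_hash


chunk_hashes, root_hash = describe_file(b"hello world!")
print(len(chunk_hashes))  # 12 bytes in 4-byte chunks gives 3 chunk hashes
print(root_hash)
```

Changing a single byte changes the hash of the chunk it lives in, and therefore the root hash too, while the other chunk hashes stay the same.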

Because each part of the file is hashed, the file is immutable (it cannot be changed). Any change to any part of the file will change the hash, so the network will recognise that part as having been tampered with, ignore it, and instead look for an untampered copy of that part from the rest of the network.
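The "ignore the tampered copy and look elsewhere" behaviour can be sketched in a few lines of Python. This is only an illustration: the candidate copies are passed in as a plain list standing in for answers from different peers, and `fetch_verified_chunk` is a hypothetical helper, not a real IPFS call.

```python
import hashlib
from typing import Iterable


def hash_bytes(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def fetch_verified_chunk(expected_hash: str, candidates: Iterable[bytes]) -> bytes:
    """Return the first candidate whose hash matches; skip anything that does not."""
    for candidate in candidates:
        if hash_bytes(candidate) == expected_hash:
            return candidate
        # A mismatch means this copy was altered or corrupted, so it is ignored.
    raise ValueError("no untampered copy of this chunk was found")


good_chunk = b"part 1 of the file"
expected = hash_bytes(good_chunk)

# The first "peer" returns a modified copy, the second returns the real one.
answers = [b"part 1 of the FILE", good_chunk]
print(fetch_verified_chunk(expected, answers))
```

Nothing here relies on trusting the peer. The check is purely on the bytes that come back.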

Of course, you may see a potential drawback. Perhaps the creator notices an error: if the file is immutable, how can they make any corrections without wasting space and decreasing efficiency?

Version Control

The IPFS network has a form of version control, which allows the owner to modify a file so that corrections or amendments can be stored without having to duplicate the entire piece of work.

To my mind, this works similarly to how a Riverbed Steelhead works: once the original is hashed and stored, any changes to the original are stored as a differential only. So when the file is delivered to a viewer, they might get 99% of the original file and 1% of the amended file. This means that a changed file doesn’t need to be saved again in full; only the differences between the original and the new file are saved. This is highly efficient, improving latency and reducing the space needed.
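A toy Python sketch of how storing "only the differences" can fall out of hashing chunks: if two versions of a file share a chunk, that chunk has the same hash and only needs to be stored once. This is my own simplified model, not the actual IPFS implementation. `BlockStore` is a hypothetical class, and the 4-byte chunk size is an assumption chosen to keep the example small.

```python
import hashlib
from typing import Dict, List

CHUNK_SIZE = 4  # assumption: tiny chunks so the sharing is easy to see


class BlockStore:
    """Stores each unique chunk once, keyed by its SHA-256 hash."""

    def __init__(self) -> None:
        self.blocks: Dict[str, bytes] = {}

    def add_file(self, data: bytes, chunk_size: int = CHUNK_SIZE) -> List[str]:
        """Store the file's chunks and return the ordered list of chunk hashes."""
        manifest = []
        for i in range(0, len(data), chunk_size):
            chunk = data[i:i + chunk_size]
            address = hashlib.sha256(chunk).hexdigest()
            self.blocks.setdefault(address, chunk)  # already stored chunks are reused
            manifest.append(address)
        return manifest

    def read_file(self, manifest: List[str]) -> bytes:
        """Rebuild a file version from its list of chunk hashes."""
        return b"".join(self.blocks[address] for address in manifest)


store = BlockStore()
version_1 = store.add_file(b"AAAABBBBCCCCDDDD")
version_2 = store.add_file(b"AAAABBBBXXXXDDDD")  # only the third chunk changed

print(len(store.blocks))  # 5 unique chunks stored, not 8
print(store.read_file(version_2))
```

One caveat with this simple version: fixed-size chunks only line up when an edit does not shift the rest of the file, so inserting a byte near the start would change every chunk after it.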