Mastering Prompt Engineering

In the rapidly evolving world of AI, the ability to generate high-quality outputs from Large Language Models (LLMs) hinges on one crucial skill: Prompt Engineering.

Mastering Prompt Engineering: The Key to High-Quality LLM Outputs

Crafting the right prompts is both an art and a science, and it’s the key to unlocking the full potential of LLMs. In this post, I’ll explore the essential steps required to master prompt engineering and consistently achieve superior results.

1. Understand the Task and Define the Objective

Before diving into prompt creation, it’s critical to have a clear understanding of what you want to achieve. Define the objective by considering the following:

- Purpose: Are you generating text, answering questions, summarizing content, translating languages, or performing another task?

- Audience: Who is the intended audience? What is their knowledge level and interest?

- Format: What format should the output take? (e.g., list, paragraph, dialogue)
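One way to make these three questions concrete is to write them down as fields before writing any prompt text. The sketch below is only an illustration: `PromptSpec` and `to_prompt` are hypothetical names, not part of any library, and the sentence template is just one possible wording.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the purpose, audience, and format questions."""
    purpose: str   # what the model should do
    audience: str  # who will read the output
    fmt: str       # the shape the output should take

    def to_prompt(self, subject: str) -> str:
        # Assemble a single instruction string from the three fields.
        return (
            f"{self.purpose} {subject}. "
            f"Write for {self.audience}. "
            f"Format the answer as {self.fmt}."
        )


spec = PromptSpec(
    purpose="Summarize",
    audience="readers new to the topic",
    fmt="a bulleted list",
)
prompt = spec.to_prompt("the main causes of inflation")
print(prompt)
```

Filling in the fields first makes it obvious when one of them is missing, which is usually where a vague prompt comes from.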

2. Use Clear and Specific Instructions

LLMs respond best to clear and specific prompts. Ambiguity can lead to varied and often undesired responses.

- Explicit Instructions: Clearly state what you want the model to do.

- Example: Instead of “Tell me about dogs,” use “Provide a detailed description of the physical characteristics, behavior, and typical diet of domestic dogs.”

3. Provide Context

Providing context helps the model generate more relevant responses. One way to think about this is as describing the persona you want the LLM to adopt when creating the output.

- Background Information: Include any necessary background information that can guide the model.

- Example: “As a historical expert, provide an analysis of the causes of the French Revolution.”
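Many chat-style LLM interfaces accept a list of messages, each with a role and some content, and the persona and background usually go in a system message. The sketch below assumes that role/content convention; `build_messages` is a hypothetical helper, and the exact message format depends on the API you use.

```python
def build_messages(persona: str, background: str, question: str) -> list[dict[str, str]]:
    """Return a chat-style message list (role/content dicts).

    The role/content layout is an assumed convention, not a specific API.
    """
    system = f"You are {persona}."
    if background:
        # Background is optional; append it only when provided.
        system += f" Background: {background}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    persona="a historical expert",
    background="The reader is a first-year university student.",
    question="Provide an analysis of the causes of the French Revolution.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping persona and background apart from the question lets you reuse the same context across many questions.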

4. Use Examples

Examples in your prompts can guide the LLM in generating the desired type of response.

- Demonstrative Examples: Show what kind of output you expect.

- Example: “Generate a list of three benefits of regular exercise. For example: 1. Improved cardiovascular health…”
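Putting worked examples in front of the real request is often called few-shot prompting. The sketch below shows one way to assemble such a prompt. The `Input:`/`Output:` labels and the `few_shot_prompt` helper are illustrative choices, and the second example pair is invented for demonstration.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a prompt that shows input/output pairs before the real query."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # End with an open "Output:" so the model continues the pattern.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = few_shot_prompt(
    instruction="Name one benefit of the activity.",
    examples=[
        ("regular exercise", "Improved cardiovascular health"),
        ("reading every day", "A larger vocabulary"),  # illustrative pair
    ],
    query="getting enough sleep",
)
print(prompt)
```

Two or three consistent examples are usually enough to convey the expected shape of the answer.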

5. Control the Length and Style

Specify the desired length and style to ensure the output matches your needs.

- Length: Specify word count, number of sentences, or other length constraints.

- Example: “Write a 200-word summary of the following text.”

- Style: Specify tone, formality, and style.

- Example: “Write an informal blog post about the benefits of yoga.”
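Length and style constraints can be appended to any task, and the length constraint can also be checked after the fact, since models do not always respect it. The sketch below is a rough illustration: `constrained_prompt` and `within_limit` are hypothetical helpers, and the check counts whitespace-separated words, which is an approximation.

```python
def constrained_prompt(task: str, max_words: int, tone: str) -> str:
    """Append explicit length and tone constraints to a task description."""
    return f"{task} Limit: {max_words} words. Tone: {tone}."


def within_limit(text: str, max_words: int) -> bool:
    # Whitespace-separated word count; a rough check, not a tokenizer.
    return len(text.split()) <= max_words


prompt = constrained_prompt("Summarize the following text.", 200, "informal")
print(prompt)

# Checking a (made-up) model reply against the limit.
reply = "Yoga improves flexibility and helps with stress."
print(within_limit(reply, 200))
```

If the reply fails the check, the same prompt can be sent again with a stricter or more explicit limit.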

6. Use Step-by-Step Prompts

For complex tasks, breaking down the prompt into smaller steps can help in achieving accurate and detailed responses.

- Incremental Instructions: Guide the model step-by-step.

- Example: “First, describe the key events of World War II. Then, explain the impact these events had on the global political landscape.”
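Instead of putting both steps in one prompt, the steps can also be sent one at a time, feeding earlier answers back in as context. The sketch below assumes a function `ask` that sends a prompt to a model and returns its reply. Here it is replaced by `echo_model`, a stand-in that makes no real LLM call, so the example runs on its own.

```python
from typing import Callable


def run_steps(steps: list[str], ask: Callable[[str], str]) -> list[str]:
    """Send each step in order, passing earlier answers along as context."""
    answers: list[str] = []
    for step in steps:
        context = "\n".join(answers)
        prompt = f"{context}\n\n{step}" if context else step
        answers.append(ask(prompt))
    return answers


def echo_model(prompt: str) -> str:
    # Stand-in for a real LLM call: it just echoes the last line it was given.
    return f"[answer to: {prompt.splitlines()[-1]}]"


steps = [
    "Describe the key events of World War II.",
    "Explain the impact these events had on the global political landscape.",
]
answers = run_steps(steps, echo_model)
for answer in answers:
    print(answer)
```

Splitting the steps this way makes it possible to inspect each intermediate answer before moving on to the next.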

7. Iterate and Refine

Prompt engineering is an iterative process. Refine your prompts based on the output you receive.

- Feedback Loop: Analyze the output and adjust the prompt accordingly.

- Example: If the output is too vague, add more specific instructions or context.
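The feedback loop can be sketched as a small function: try the prompt, check the output, and add one more instruction each time the check fails. Everything named here is hypothetical. `stub_model` stands in for a real LLM call, and the "good enough" test is a crude word count chosen only to keep the example runnable.

```python
from typing import Callable


def refine(
    prompt: str,
    ask: Callable[[str], str],
    is_good_enough: Callable[[str], bool],
    fixes: list[str],
) -> tuple[str, str]:
    """Try the prompt; while the output fails the check, append the next fix and retry."""
    output = ask(prompt)
    for fix in fixes:
        if is_good_enough(output):
            break
        prompt = f"{prompt} {fix}"
        output = ask(prompt)
    return prompt, output


def stub_model(prompt: str) -> str:
    # Stand-in for a real LLM call: it only gets specific when asked about diet.
    if "diet" in prompt:
        return "[detailed answer about the typical diet of domestic dogs]"
    return "[vague answer]"


final_prompt, output = refine(
    prompt="Tell me about dogs.",
    ask=stub_model,
    is_good_enough=lambda text: len(text.split()) >= 5,
    fixes=["Describe their typical diet.", "Answer in at least three sentences."],
)
print(final_prompt)
print(output)
```

In practice the check is usually a person reading the output, but writing down what "good enough" means helps keep the iterations focused.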

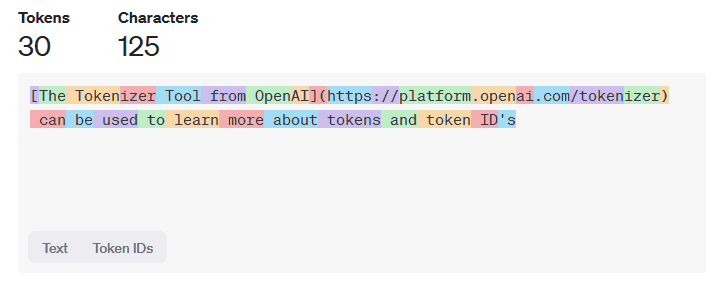

8. Use Special Tokens and Keywords

Some LLMs understand special tokens and keywords that can guide the generation process more precisely.

- Special Tokens: Use tokens like <START> and <END> to delimit specific sections.

- Example: “<START> Write a conclusion for an essay on climate change <END>”
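Delimiters such as `<START>` and `<END>` can be added and read back with plain string handling. Whether a given model treats these markers specially depends on the model, so the sketch below treats them only as ordinary text delimiters. `wrap` and `extract` are hypothetical helper names.

```python
from typing import Optional

START, END = "<START>", "<END>"


def wrap(section: str) -> str:
    """Surround a section with the start and end markers."""
    return f"{START}{section}{END}"


def extract(text: str) -> Optional[str]:
    """Return the text between the first marker pair, or None if there is none."""
    start = text.find(START)
    if start == -1:
        return None
    end = text.find(END, start + len(START))
    if end == -1:
        return None
    return text[start + len(START):end]


prompt = (
    "Write a conclusion for the essay between the markers.\n"
    + wrap("Essay text goes here.")
)
print(prompt)
print(extract(prompt))
```

The same pair of helpers can be used on the reply if the prompt asks the model to put its answer between the markers.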

9. Experiment with Different Prompts

Don’t hesitate to try various prompts to see which one yields the best results. Experimentation is key to mastering prompt engineering.

- Variety of Approaches: Test different wording, context, and examples to find the optimal prompt.
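Comparing prompt variants is easier when each one is scored the same way. The sketch below is a toy illustration under stated assumptions: `stub_model` stands in for a real LLM call, `coverage` is a made-up scoring rule that counts required topics, and `best_variant` is a hypothetical helper.

```python
from typing import Callable

REQUIRED = ("behavior", "diet")  # topics the output is expected to cover


def stub_model(prompt: str) -> str:
    # Stand-in for a real LLM call: it only "covers" topics the prompt names.
    return " ".join(term for term in REQUIRED if term in prompt)


def coverage(output: str) -> int:
    # Score an output by how many required topics it mentions.
    return sum(term in output for term in REQUIRED)


def best_variant(
    variants: list[str],
    ask: Callable[[str], str],
    score: Callable[[str], int],
) -> str:
    """Return the variant whose output scores highest (first one wins ties)."""
    return max(variants, key=lambda variant: score(ask(variant)))


variants = [
    "Tell me about dogs.",
    "Describe the behavior of domestic dogs.",
    "Describe the behavior and typical diet of domestic dogs.",
]
best = best_variant(variants, stub_model, coverage)
print(best)
```

With a real model, outputs vary between runs, so it helps to score each variant several times before picking a winner.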

10. Stay Updated with Best Practices

LLM technology is evolving rapidly. Stay informed about the latest research and community best practices.

- Community and Research: Follow blogs (like this one), forums, and research papers on prompt engineering and LLM advancements.

Conclusion

High-quality prompting is essential for high-quality outputs. By understanding the task, using clear instructions, providing context, using examples, controlling the length and style, employing step-by-step prompts, iterating and refining, using special tokens, experimenting, and staying updated with best practices, you can significantly improve the outputs generated by LLMs.

Mastering prompt engineering is not just about technical prowess; it’s about communicating clearly and precisely with the AI to achieve the best possible results.

In the world of Prompt Engineering, natural language is the hottest new programming language!